2018-09-25 14:24:56

Embeddable results

Big data analytics gains value when the insights gleaned from data models can support decisions made while using other applications. "It is of utmost importance to be able to incorporate these insights into a real-time decision-making process," said Dheeraj Remella. Tools should therefore be able to produce insights in a format that is easily embedded into a decision-making platform, which can then apply those insights to a real-time stream of event data to make in-the-moment decisions.
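To make "embeddable" concrete, here is a minimal sketch that assumes the exported insight is a small logistic-regression model, applied to each event in a stream as it arrives. The feature names (`amount_zscore`, `new_device`), the weights, the bias and the 0.5 decision threshold are all hypothetical values chosen for illustration, not anything described in the article.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Iterator


# Hypothetical insight exported from an offline model: logistic-regression
# weights for two illustrative features. All values here are assumptions.
@dataclass(frozen=True)
class EmbeddedModel:
    weights: dict[str, float]
    bias: float
    threshold: float = 0.5

    def score(self, event: dict[str, float]) -> float:
        # Missing features default to 0.0 so a partial event can still be scored.
        z = self.bias + sum(w * event.get(name, 0.0) for name, w in self.weights.items())
        return 1.0 / (1.0 + math.exp(-z))

    def decide(self, event: dict[str, float]) -> bool:
        return self.score(event) >= self.threshold


def decide_stream(
    model: EmbeddedModel, events: Iterable[dict[str, float]]
) -> Iterator[tuple[dict[str, float], bool]]:
    # Apply the embedded model to each event as it arrives (an in-the-moment decision).
    for event in events:
        yield event, model.decide(event)


model = EmbeddedModel(weights={"amount_zscore": 1.5, "new_device": 2.0}, bias=-3.0)
stream = [
    {"amount_zscore": 0.2, "new_device": 0.0},
    {"amount_zscore": 2.5, "new_device": 1.0},
]
for event, flagged in decide_stream(model, stream):
    print(event, "flag" if flagged else "allow")
```

The point of the design is that the model is just data (weights plus a threshold), so the decision platform can load and evaluate it without calling back into the analytics tool for every event.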

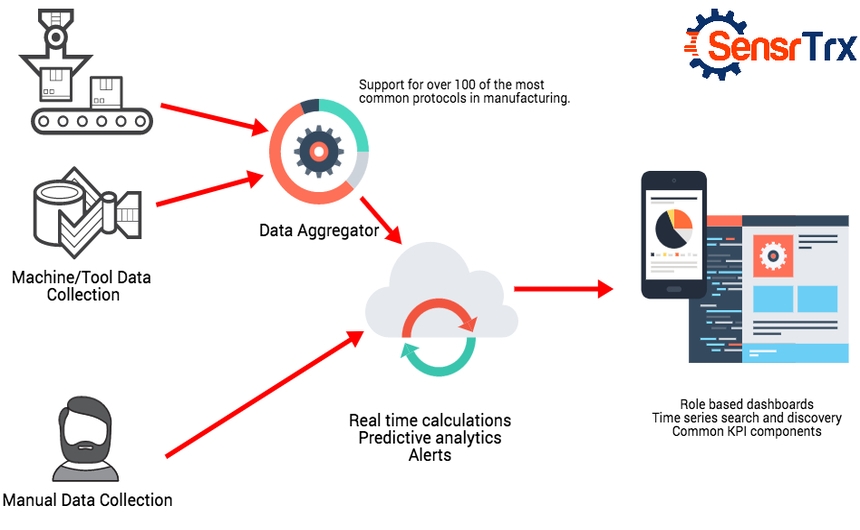

Data wrangling

Data wrangling (cleaning, labeling and organizing data for analytics) involves seamless integration across disparate data sources and types, applications and APIs; cleansing the data; and providing granular, role-based, secure access to it. Big data analytics tools must support the full spectrum of data types, protocols and integration scenarios to speed up and simplify these data wrangling steps.
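The wrangling steps above (integrating sources, cleansing values and restricting access by role) can be sketched in a few lines. This is an illustration under stated assumptions: the two source schemas, the sample rows and the `ROLE_COLUMNS` policy are hypothetical, and a real tool would enforce access control in the platform rather than in analysis code.

```python
from typing import Any, Optional

# Two hypothetical sources that describe the same customers with different
# field names, types and formatting (illustrative data only).
crm_rows = [
    {"CustomerID": "001", "Email": " Alice@Example.com ", "Region": "emea"},
    {"CustomerID": "002", "Email": None, "Region": "na"},
]
billing_rows = [
    {"cust_id": 1, "email": "alice@example.com", "balance": "120.50"},
    {"cust_id": 3, "email": "carol@example.com", "balance": "80"},
]

# Hypothetical role-to-column policy; a real tool would enforce this centrally.
ROLE_COLUMNS = {
    "analyst": {"customer_id", "region", "balance"},
    "support": {"customer_id", "email", "region"},
}


def normalize_email(value: Optional[str]) -> Optional[str]:
    """Trim and lowercase so the same address matches across sources."""
    return value.strip().lower() if value else None


def from_crm(row: dict[str, Any]) -> dict[str, Any]:
    return {"customer_id": int(row["CustomerID"]),
            "email": normalize_email(row["Email"]),
            "region": row["Region"].upper()}


def from_billing(row: dict[str, Any]) -> dict[str, Any]:
    return {"customer_id": int(row["cust_id"]),
            "email": normalize_email(row["email"]),
            "balance": float(row["balance"])}


def integrate(crm, billing) -> dict[int, dict[str, Any]]:
    """Map both sources to one schema and merge them on customer_id."""
    merged: dict[int, dict[str, Any]] = {}
    for record in [from_crm(r) for r in crm] + [from_billing(r) for r in billing]:
        target = merged.setdefault(record["customer_id"], {})
        for key, value in record.items():
            # Keep the first non-missing value seen for each field.
            if value is not None and target.get(key) is None:
                target[key] = value
    return merged


def view_for(role: str, merged: dict[int, dict[str, Any]]) -> list[dict[str, Any]]:
    """Return only the columns the given role is allowed to see."""
    allowed = ROLE_COLUMNS[role]
    return [{k: v for k, v in rec.items() if k in allowed} for rec in merged.values()]


customers = integrate(crm_rows, billing_rows)
print(view_for("analyst", customers))
```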

Data exploration

Data analytics frequently involves an ad hoc discovery and exploration phase over the underlying data. This exploration helps organizations understand the business context of a problem and formulate better analytic questions. Features that streamline this phase reduce the effort of testing new hypotheses about the data, so bad hypotheses can be weeded out faster and useful connections buried in the data are discovered sooner.
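One cheap way to weed out weak hypotheses early is a correlation screen: compute how strongly each candidate variable tracks the outcome and drop the ones below a cutoff. The sketch below is only an illustration; the sample data, feature names and the `min_abs_r=0.5` cutoff are assumptions, and Pearson correlation only detects linear relationships, so it is a first pass rather than a verdict.

```python
import math


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient; returns 0.0 if either series is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0
    return cov / (sx * sy)


def screen_hypotheses(features: dict[str, list[float]], target: list[float],
                      min_abs_r: float = 0.5) -> dict[str, float]:
    """Keep only candidate features whose linear correlation with the target is strong enough."""
    kept = {}
    for name, values in features.items():
        r = pearson(values, target)
        if abs(r) >= min_abs_r:
            kept[name] = r
    return kept


# Hypothetical exploration data: does weekly sales track ad spend or temperature?
weekly_sales = [10.0, 12.0, 15.0, 19.0, 24.0]
candidates = {
    "ad_spend": [1.0, 2.0, 3.0, 4.0, 5.0],
    "avg_temperature": [20.0, 22.0, 19.0, 21.0, 20.0],
}
print(screen_hypotheses(candidates, weekly_sales))
```

With this data, `ad_spend` survives (r ≈ 0.99) while `avg_temperature` (r ≈ -0.16) is set aside, so the analyst can spend time on the more promising question.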

Support for different analytics

There is a wide variety of approaches to putting data analytics results into production, including business intelligence, predictive analytics, real-time analytics and machine learning. Each approach provides a different kind of value to the business. Good big data analytics tools should be functional and flexible enough to support these different use cases with minimal effort, and without the retraining that adopting a separate tool for each might require.

Scalability

Data models must support high levels of scale for ingesting data and for working with large data sets in production, without exorbitant hardware or cloud service costs. "A tool that scales an algorithm from small data sets to large ones with minimal effort is also critical."
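A simple illustration of an algorithm that scales from small to large data without code changes is a one-pass (streaming) computation. The sketch below uses Welford's online algorithm for mean and variance, plus the Chan et al. formula for combining partial results, so the same code can summarize a short list, a generator too large to hold in memory, or separate partitions processed on different workers. It is a generic technique chosen for illustration, not a method attributed to any particular tool.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class RunningStats:
    count: int = 0
    mean: float = 0.0
    m2: float = 0.0  # sum of squared deviations from the current mean

    def update(self, x: float) -> None:
        # Welford's update: constant memory, one pass over the data.
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (x - self.mean)

    def variance(self) -> float:
        # Sample variance; undefined for fewer than two observations.
        return self.m2 / (self.count - 1) if self.count > 1 else float("nan")

    def merge(self, other: "RunningStats") -> "RunningStats":
        # Chan et al. combination, so partitions can be summarized independently.
        n = self.count + other.count
        if n == 0:
            return RunningStats()
        delta = other.mean - self.mean
        mean = self.mean + delta * other.count / n
        m2 = self.m2 + other.m2 + delta * delta * self.count * other.count / n
        return RunningStats(n, mean, m2)


def summarize(values: Iterable[float]) -> RunningStats:
    stats = RunningStats()
    for v in values:
        stats.update(v)
    return stats


small = summarize([2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0])
# A generator is consumed lazily, so the full data set is never held in memory.
large = summarize(float(i % 10) for i in range(1_000_000))
print(small.mean, small.variance(), large.mean)
```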

Version control

In a large data analytics project, several individuals may be involved in adjusting the model parameters. Some of these changes may initially look promising but create unexpected problems when pushed into production. Version control built into big data analytics tools makes it easier to track these changes and, if problems emerge later, to roll back an analytics model to a previous version that worked better. "Without version control, one change made by a single developer can result in a breakdown of all that was already created."
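The core idea (record every parameter change with its author, compare versions, and roll back) fits in a small class. This is a hypothetical, in-memory sketch; `ParameterRegistry` and the parameter names `learning_rate` and `max_depth` are illustrative, and a real tool would add persistence, access control and review on top of it.

```python
import copy
from dataclasses import dataclass
from typing import Any, Optional


@dataclass(frozen=True)
class ModelVersion:
    version: int
    params: dict[str, Any]
    author: str
    note: str


class ParameterRegistry:
    """Hypothetical append-only history of model parameters with an 'active' pointer."""

    def __init__(self) -> None:
        self._history: list[ModelVersion] = []
        self._active: Optional[int] = None

    def commit(self, params: dict[str, Any], author: str, note: str = "") -> int:
        version = len(self._history) + 1
        # Deep-copy so later edits to the caller's dict cannot alter history.
        self._history.append(ModelVersion(version, copy.deepcopy(params), author, note))
        self._active = version
        return version

    def rollback(self, version: int) -> None:
        # Rolling back only moves the pointer; newer versions stay in history for auditing.
        if not 1 <= version <= len(self._history):
            raise ValueError(f"unknown version {version}")
        self._active = version

    def current(self) -> dict[str, Any]:
        if self._active is None:
            raise LookupError("no version committed yet")
        return copy.deepcopy(self._history[self._active - 1].params)

    def diff(self, a: int, b: int) -> dict[str, tuple[Any, Any]]:
        """Parameters that differ between versions a and b, as (a_value, b_value) pairs."""
        pa, pb = self._history[a - 1].params, self._history[b - 1].params
        return {k: (pa.get(k), pb.get(k))
                for k in sorted(pa.keys() | pb.keys()) if pa.get(k) != pb.get(k)}


registry = ParameterRegistry()
v1 = registry.commit({"learning_rate": 0.1, "max_depth": 6}, author="alice", note="baseline")
v2 = registry.commit({"learning_rate": 0.5, "max_depth": 6}, author="bob", note="faster training")
print(registry.diff(v1, v2))
registry.rollback(v1)  # suppose v2 misbehaved in production
print(registry.current())
```

Keeping history append-only means a rollback never destroys the problematic version, so the team can still inspect what changed and who changed it.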

Simple integration

Data analytics tools should support easy integration with existing enterprise and cloud applications and data warehouses. Simple integration also makes it easier to share results with other developers and data scientists.

Data management

Big data analytics tools need a robust yet efficient data management platform to ensure continuity and standardization across all deliverables. A robust data management platform can help an enterprise maintain a single source of truth, which is critical for a successful data initiative.

Data governance

Data governance features are important for big data analytics tools to help enterprises stay compliant and secure. This includes being able to track the source and characteristics of the data sets used to build analytic models and to help secure and manage data used by data scientists and engineers. Data sets used to build models may introduce hidden biases that could create discrimination problems.
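Tracking the source and characteristics of a training set can start with a lineage record: where the data came from, a content fingerprint that changes if the data changes, the columns used, and the distribution of a sensitive attribute so that skew (one possible source of hidden bias) is visible. This is a hypothetical sketch; the field names, the sample rows, the source label and the choice of SHA-256 over canonical JSON are assumptions for illustration.

```python
import hashlib
import json
from collections import Counter
from datetime import datetime, timezone
from typing import Any


def fingerprint(rows: list[dict[str, Any]]) -> str:
    # Canonical JSON (sorted keys, fixed separators) so identical content hashes the same.
    payload = json.dumps(rows, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def lineage_record(rows: list[dict[str, Any]], source: str, sensitive_column: str) -> dict[str, Any]:
    counts = Counter(row.get(sensitive_column) for row in rows)
    total = len(rows)
    return {
        "source": source,
        "sha256": fingerprint(rows),
        "row_count": total,
        "columns": sorted({key for row in rows for key in row}),
        "recorded_at": datetime.now(timezone.utc).isoformat(),
        # Share of each group in the sensitive column, to make skewed training data visible.
        f"{sensitive_column}_shares": {str(k): v / total for k, v in counts.items()} if total else {},
    }


# Hypothetical training rows and source label.
training_rows = [
    {"age_band": "18-34", "income": 52000, "approved": 1},
    {"age_band": "18-34", "income": 61000, "approved": 1},
    {"age_band": "18-34", "income": 47000, "approved": 0},
    {"age_band": "55+", "income": 58000, "approved": 0},
]
record = lineage_record(training_rows, source="loan_applications_extract (hypothetical)",
                        sensitive_column="age_band")
print(json.dumps(record, indent=2))
```

Storing such a record alongside each model version lets an auditor later confirm exactly which data a model was trained on and whether some groups were under-represented.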

Data governance is especially crucial for sensitive data, such as protected health information and personally identifiable information, which must be handled in compliance with privacy regulations. Some tools now include the ability to pseudonymize data, allowing data scientists to build models based on personal information in compliance with regulations like GDPR.
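A common way to pseudonymize identifiers is a keyed hash such as HMAC-SHA256: the same input always maps to the same token under a given key, so records can still be joined and counted, but the original value cannot be recomputed without the key. The sketch below uses Python's standard `hmac` and `secrets` modules; the record layout and field names are hypothetical. Note that pseudonymized data generally still counts as personal data under GDPR, whose definition of pseudonymisation (Article 4(5)) requires the additional information (here, the key) to be kept separately.

```python
import hashlib
import hmac
import secrets
from typing import Any


def pseudonymize(value: str, key: bytes) -> str:
    # Keyed hash: deterministic for a given key, so joins across tables still work,
    # but tokens cannot be reproduced by anyone who lacks the key.
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymize_records(records: list[dict[str, Any]], fields: set[str],
                         key: bytes) -> list[dict[str, Any]]:
    """Replace the listed identifying fields; leave analytic fields untouched."""
    return [{k: pseudonymize(str(v), key) if k in fields else v for k, v in r.items()}
            for r in records]


# In practice the key would live in a key vault, separate from the data.
key = secrets.token_bytes(32)
patients = [  # hypothetical records
    {"patient_id": "P-1001", "name": "Jane Doe", "diagnosis_code": "E11"},
    {"patient_id": "P-1001", "name": "Jane Doe", "diagnosis_code": "I10"},
]
masked = pseudonymize_records(patients, {"patient_id", "name"}, key)
for row in masked:
    print(row)
```

Both masked rows share the same `patient_id` token, so a model can still learn per-patient patterns without ever seeing the real identifier.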

Data processing frameworks

Many big data analytics tools focus on either analytics or data processing. Some frameworks, like Apache Spark, support both. These enable developers and data scientists to use the same tools for real-time processing; complex extract, transform and load tasks; machine learning; reporting; and SQL. This is important because data science is a highly iterative process. A data scientist might create 100 models before arriving at one that is put into production. This iterative process often involves enriching the data to improve the results of the models.
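The iterate-until-something-works loop described above can be sketched without any framework: generate candidate models, here by enriching the data with different derived features, fit each one, and keep the one that performs best on held-out data. This plain-Python sketch is only illustrative; it does not use Apache Spark, and the transforms, the synthetic data and the choice of validation mean squared error as the selection criterion are all assumptions.

```python
import math
from typing import Callable

# Candidate derived features stand in for "enriching" the data; each one
# yields a different simple model y = a + b * f(x). Names are illustrative.
CANDIDATES: dict[str, Callable[[float], float]] = {
    "raw": lambda x: x,
    "squared": lambda x: x * x,
    "log": math.log,
}


def fit_line(fs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares for y = a + b * f with a single feature."""
    n = len(fs)
    mf, my = sum(fs) / n, sum(ys) / n
    b = sum((f - mf) * (y - my) for f, y in zip(fs, ys)) / sum((f - mf) ** 2 for f in fs)
    return my - b * mf, b


def mse(a: float, b: float, fs: list[float], ys: list[float]) -> float:
    return sum((a + b * f - y) ** 2 for f, y in zip(fs, ys)) / len(ys)


def select_model(train_x, train_y, val_x, val_y) -> tuple[str, dict[str, float]]:
    """Fit every candidate on the training split and keep the best on validation."""
    scores = {}
    for name, transform in CANDIDATES.items():
        a, b = fit_line([transform(x) for x in train_x], train_y)
        scores[name] = mse(a, b, [transform(x) for x in val_x], val_y)
    best = min(scores, key=scores.get)
    return best, scores


train_x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
train_y = [x * x for x in train_x]  # synthetic data with a quadratic relationship
val_x, val_y = [7.0, 8.0], [49.0, 64.0]
best, scores = select_model(train_x, train_y, val_x, val_y)
print(best, scores)
```

In a real project the candidate list might hold hundreds of entries, which is why running preparation, training and evaluation in one framework matters.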